From the Microsoft Research article:

People can be nostalgic and often cherish the happy moments in revisiting old times. There is a strong desire to bring old photos back to their original quality so that people can feel the nostalgia of travelling back to their childhood or experiencing earlier memories. However, manually restoring old photos is usually laborious and time-consuming, and most users may not be able to afford these expensive services. Researchers at Microsoft recently proposed a technique to automate this restoration process, which revives old photos with compelling quality using AI. Different from simply cascading multiple processing operators, we propose a solution specifically for old photos and thus achieve far better results. This work will be presented at CVPR 2020.

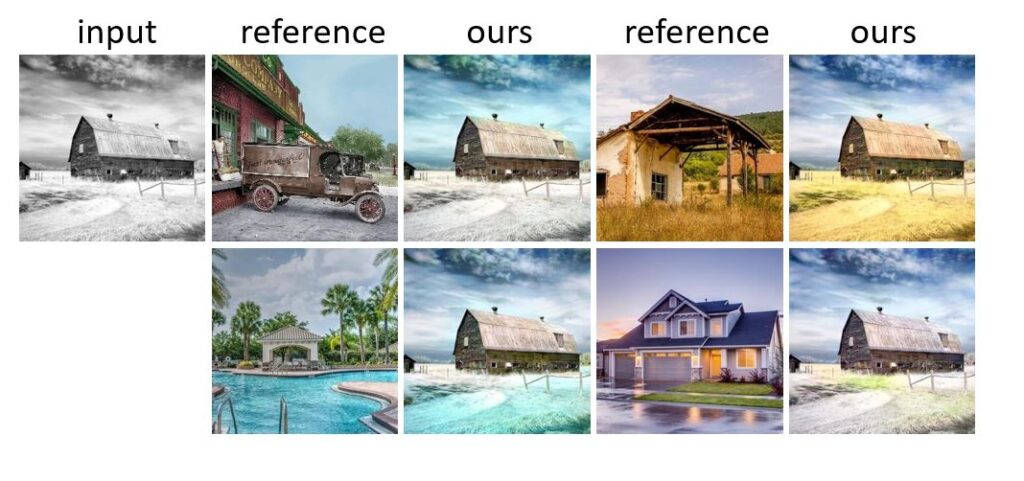

With the emergence of deep learning, one can address a variety of low-level image restoration problems by exploiting the powerful representation capability of convolutional neural networks, that is, learning the mapping for a specific task from a large amount of synthetic images. The same framework, however, does not apply to old photo restoration. This is because old photos are plagued with multiple degradations (such as scratches, blemishes, color fading, or film noise) and there exists no degradation model that can realistically render old photo artifacts (Figure 5). Also, photography technology continually evolves, so photos of different eras demonstrate different artifacts. As a result, the domain gap between the synthetic images and real old photos makes the network fail to generalize.
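To make "learning from synthetic images" a little more concrete, here is a minimal sketch of how a clean image might be artificially degraded to produce a training pair. Everything in it is an assumption for illustration: the function name `degrade`, the three steps (fading, noise, a single scratch) and their parameters are made up, and this is not the degradation model from the paper. It also hints at the problem described above, since real old photos look far messier than anything this simple can render.

```python
import random


def degrade(clean, seed=0):
    """Return a synthetically degraded copy of a grayscale image.

    `clean` is a list of rows of floats in [0, 1]. The steps below are
    illustrative stand-ins (hypothetical), not the paper's method.
    """
    rng = random.Random(seed)
    width = len(clean[0])
    scratch_col = rng.randrange(width)  # one column becomes a scratch
    degraded = []
    for row in clean:
        new_row = []
        for col, pixel in enumerate(row):
            faded = 0.5 + 0.6 * (pixel - 0.5)     # compress contrast toward mid-gray
            noisy = faded + rng.gauss(0.0, 0.03)  # grain-like random noise
            if col == scratch_col:
                noisy = 1.0                       # bright vertical scratch
            new_row.append(min(1.0, max(0.0, noisy)))  # clamp to [0, 1]
        degraded.append(new_row)
    return degraded


# A clean 8x8 gradient and its degraded counterpart form one training pair.
clean_image = [[(x + y) / 14 for x in range(8)] for y in range(8)]
training_pair = (degrade(clean_image), clean_image)
```

A network trained only on pairs like these learns to undo exactly these artifacts, which is why it tends to struggle on real prints.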

We formulate old photo restoration as a triplet domain translation problem. Specifically, we leverage data from three domains: the real photo domain R, the synthetic domain X where images suffer from artificial degradation, and the corresponding ground truth domain Y that comprises images without degradation. The synthetic images in X have their corresponding counterparts in Y. We learn the mapping X→Y in the latent space. Such triplet domain translation (Figure 2) is crucial to our task as it leverages the unlabeled real photos as well as a large amount of synthetic data associated with ground truth.
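As a rough picture of the data flow, the sketch below chains three steps: encode a degraded photo into a latent vector, map that vector towards the clean domain, then decode it back into an image. It assumes, as my reading of the approach rather than something stated in the excerpt, that real photos (R) and synthetic ones (X) share an encoder so that the X→Y mapping learned on synthetic pairs can be reused on real photos. The names `make_restorer`, `toy_encode`, `toy_map` and `toy_decode` are hypothetical, and the toy functions are trivial arithmetic standing in for trained neural networks.

```python
from typing import Callable, List

Image = List[List[float]]
Latent = List[float]


def make_restorer(
    encode_degraded: Callable[[Image], Latent],
    map_latent: Callable[[Latent], Latent],
    decode_clean: Callable[[Latent], Image],
) -> Callable[[Image], Image]:
    """Compose encoder, latent mapping and decoder into one restore function."""
    def restore(photo: Image) -> Image:
        z_degraded = encode_degraded(photo)  # R or X -> latent (shared encoder assumed)
        z_clean = map_latent(z_degraded)     # the X -> Y mapping, applied in latent space
        return decode_clean(z_clean)         # latent -> image in the clean domain Y
    return restore


# Toy stand-ins so the sketch runs; real components would be trained networks.
WIDTH = 2


def toy_encode(img: Image) -> Latent:
    return [p for row in img for p in row]  # flatten the image


def toy_map(z: Latent) -> Latent:
    # Undo a 0.6x contrast fade around mid-gray, clamped to [0, 1].
    return [min(1.0, max(0.0, 0.5 + (v - 0.5) / 0.6)) for v in z]


def toy_decode(z: Latent) -> Image:
    return [z[i:i + WIDTH] for i in range(0, len(z), WIDTH)]  # reshape to rows


restore = make_restorer(toy_encode, toy_map, toy_decode)
restored = restore([[0.38, 0.5], [0.5, 0.62]])
```

The point of the composition is that only the middle step needs paired data (X with Y), while the encoder can also be shown unlabeled real photos.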

And so on. There are loads of diagrams and formulae. You don't need to understand most of it - but it's good to know that clever folk are continuing to push the boundaries of image analysis. So that, in Windows Photos or similar, in a few years' time, when you hit 'Auto-fix' after scanning an old print of your grandmother's, true imaging magic just may be possible!
