The 'megapixel myth', in short, is that the overall quality of images from a camera is proportional to the number of megapixels in its sensor. It's a marketing trick that's usually deployed to good effect on bullet point lists in High Street shops, e.g. "16 Megapixels" or "20.7MP" or similar. The average buyer sees a higher number and automatically assumes that more is better.

Often erroneously so, of course. Whether looking at standalone cameras or camera phones, the quality of photos taken depends on a lot of factors, including:

- Sensor size

- Sensor resolution

- Aperture size

- Quality of optics

- Quality of image processing electronics and algorithms

- Skill of person taking the shot (i.e. not making basic mistakes!)

So, yes, resolution's in the mix somewhere, but even then it's not a given that the higher the resolution, the better the photos. For example, for a given physical size of sensor (and current flagships are trending towards a 1/2.5" rectangular sensor), the more pixels there are, the smaller each one has to be (they currently go down to 1.1 microns, i.e. millionths of a metre). Which means that each pixel gathers less light and thus you get more digital noise. I've seen this a lot in the smartphone world. (I'll give a shout out to the LG G4 as an exception, since the low noise and colour accuracy of its 16MP sensor are astonishingly good, but until we've a) all worked out how LG has achieved this, and b) thought of a use for all those pixels, then I think my points and this feature still stand.)
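To put rough numbers on the "more pixels means smaller pixels" point, here is a minimal sketch. The 5.76mm x 4.29mm active area is an assumed nominal figure for a 1/2.5" sensor (it isn't from any manufacturer's spec sheet quoted here), and the function name is purely illustrative. It also assumes square pixels that tile the whole sensor with no gaps.

```python
import math

def pixel_pitch_um(width_mm, height_mm, megapixels):
    """Approximate pixel pitch in microns.

    Assumes square pixels tiling the full sensor area, which real
    sensors only approximate.
    """
    area_um2 = (width_mm * 1000.0) * (height_mm * 1000.0)
    return math.sqrt(area_um2 / (megapixels * 1_000_000))

# Assumed nominal active area for a 1/2.5" sensor (an illustration only).
SENSOR_W_MM, SENSOR_H_MM = 5.76, 4.29

for mp in (8, 13, 16, 20):
    pitch = pixel_pitch_um(SENSOR_W_MM, SENSOR_H_MM, mp)
    # Light-gathering area per pixel scales with the square of the pitch.
    print(f"{mp:>2}MP -> {pitch:.2f} micron pitch, "
          f"{pitch * pitch:.2f} square microns per pixel")
```

With these assumed dimensions, 20MP works out at roughly 1.1 microns per pixel, in line with the figure quoted above, and each doubling of the pixel count halves the area each pixel has to collect light.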

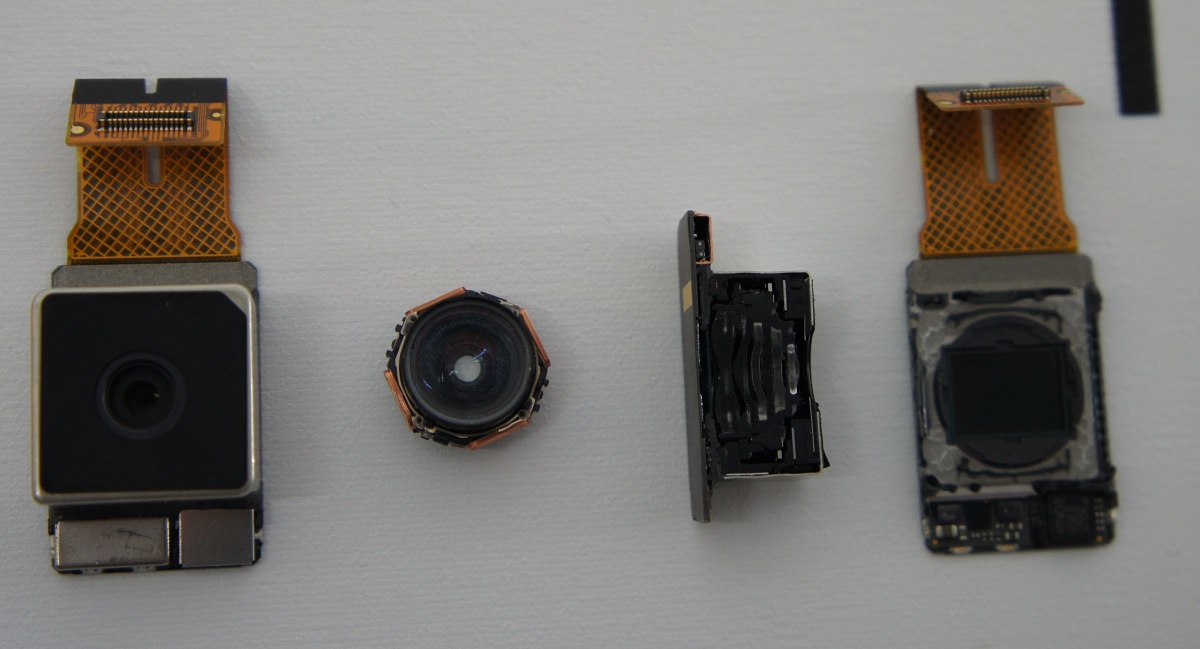

Inside a typical 2015 smartphone camera, using the Lumia 1020 (mentioned below) as an example. From left to right, the camera module, part of the moving lens mechanism, a cross-section through the module, showing the lenses, and the backplane, showing the sensor matrix itself.

The concept of a 'sweet spot' is very valid here, of course. It's tempting to think 'Well, if larger pixels are better, let's go for a low Megapixel count from the start'. This is what HTC did with its One M7 and M8, with 'ultrapixels', and the results were almost always disappointing. It's all to do with Nyquist frequencies and the sheer amount (or lack) of raw information, you see. As I reported here, quoting a conference article:

Typically the goal in optimizing the camera resolution is to match the pixel size with smallest resolution element that the optical system is capable of producing. In terms of sampling theorem, the pixel pitch defines the spatial sampling frequency, fS, and thereby Nyquist frequency, fN = ½*fS of the imaging system. Nyquist frequency defines the frequency above which aliasing can happen, but it doesn’t yet tell at what frequency image details can be resolved.

Which is a lot of mumbo jumbo but boils down (if you read the original text) to needing higher resolution of original data for a 5MP (or 4MP in the HTC's case) photo than simply having a 5MP (or 4MP) sensor. In layman's terms, for best detail at any given resolution, you really need to process data from double that resolution.
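For anyone who'd rather see the quoted relationship as arithmetic, here's a small sketch of fS and fN = ½*fS worked out from pixel pitch. The function names are my own, the 1.1 micron figure is the one quoted earlier, and the 2.0 micron figure is just an illustrative larger pixel. As the quote itself warns, this only gives the sampling limit, not what the optics can actually resolve.

```python
def sampling_frequency_per_mm(pixel_pitch_um):
    """Spatial sampling frequency fS, in samples per millimetre."""
    return 1000.0 / pixel_pitch_um

def nyquist_frequency_per_mm(pixel_pitch_um):
    """Nyquist frequency fN = fS / 2, in cycles (line pairs) per millimetre."""
    return 0.5 * sampling_frequency_per_mm(pixel_pitch_um)

def max_line_pairs_across(pixels_across):
    """Upper bound on light/dark line pairs a row of pixels can sample:
    each pair needs at least two pixels."""
    return pixels_across // 2

for pitch_um in (1.1, 2.0):
    print(f"{pitch_um} micron pitch: fS = {sampling_frequency_per_mm(pitch_um):.0f}/mm, "
          f"fN = {nyquist_frequency_per_mm(pitch_um):.0f} cycles/mm")
```

The factor of two is the same one behind the layman's version above: it takes at least two samples to capture one cycle of detail.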

At the other end of the scale, 20MP sensors (as about to be pushed by Microsoft for the likes of the Lumia 930 and 1520 if you use the default Camera application in Windows 10 Mobile, but also a resolution being trended towards by other manufacturers) are clearly - I contend - far too high, at least to be used natively, i.e. the pixels are too small and it's mainly pain and little gain. A higher resolution means:

- higher digital noise per pixel

- larger file sizes, eating up storage faster and requiring longer download times for others when sharing

- higher processor load per shot

- potentially longer shot to shot times

- slower processing when adding filters and tune-ups later on

So... 4MP is clearly too low, at least in HTC's admittedly flawed implementation. While 20MP and beyond is too high.

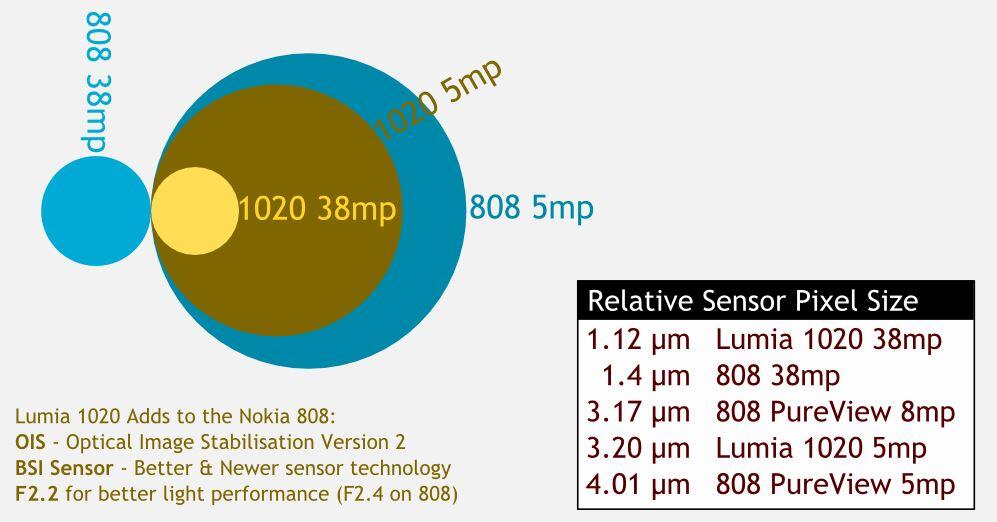

Now, before someone writes in, in the comments, I should emphasise that the '41MP' resolution of the Nokia 808 PureView and Lumia 1020 is just the underlying resolution of their sensors. Remember that mumbo jumbo about Nyquist frequencies above? The PureView algorithms (in the 808 or 1020, etc.) are there in each case to 'oversample' from this massive amount of data down to 'purer' pixels, outputting at 5MP, but hopefully with almost zero noise as a result of the conversion of each group of 6 or 7 pixels' data (on average) down to individual pixel data in the eventual output.
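As a rough illustration of why combining several sensor pixels into one output pixel cuts noise, here's a minimal sketch using plain averaging. To be clear, this is not the PureView algorithm, whose details aren't given here; it's ordinary pixel binning on a made-up flat grey patch, and it assumes the noise on each pixel is independent. The signal level, noise level and group size of 7 are all hypothetical values chosen for the demo.

```python
import random
import statistics

def bin_pixels(samples, group_size):
    """Average consecutive groups of `group_size` samples into one value each.

    Any leftover samples that don't fill a complete group are dropped.
    """
    usable = len(samples) - len(samples) % group_size
    return [
        sum(samples[i:i + group_size]) / group_size
        for i in range(0, usable, group_size)
    ]

random.seed(1)          # fixed seed so the demo is repeatable
TRUE_LEVEL = 100.0      # hypothetical flat grey patch
NOISE_SIGMA = 10.0      # hypothetical per-pixel noise
raw = [random.gauss(TRUE_LEVEL, NOISE_SIGMA) for _ in range(70_000)]
oversampled = bin_pixels(raw, 7)

print(f"raw pixels:         {len(raw)}, noise {statistics.pstdev(raw):.2f}")
print(f"oversampled pixels: {len(oversampled)}, noise {statistics.pstdev(oversampled):.2f}")
```

Simple averaging like this reduces random noise by roughly the square root of the group size, so it's a substantial drop rather than literally zero. A real pipeline would work in two dimensions and weight neighbouring pixels more cleverly.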

For comparison, the smallest pixel size here, 1.1 microns, is what's used on most current (flagship) smartphone cameras. The point to take away here is just how much larger the oversampled (virtual) 'super-pixels' are on the 1020 and 808....

At this point in debunking the megapixel myth when considering resolution, a very good question to ask is what the photos we take will be used for. After all, if they're for a Facebook page then a 1MP image is more than adequate. At the other end of the scale, perhaps we might want to display them on a 4K television, brand new over the last couple of years and sure to become the standard over the next five. It may surprise you to know that a '4K' television, in terms of the number of pixels (3840 x 2160), equates only to 8 Megapixels.

To restate this, even if you 'only' have an 8MP image, you've still got enough resolution to match every single tiny pixel on a cutting edge 4K TV. In fact, if you do the maths, with high quality conventional ink jet printers, printing photos out on photo paper at, say, 8"x6" still only requires about the same resolution, i.e. 8MP. In fact, is there any application/use/display in the world that requires higher resolution than this? I'm struggling to think of one.
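If you'd like to 'do the maths' yourself, here's a quick sketch. The 4K figure comes straight from the 3840 x 2160 pixel grid mentioned above. The print figures depend on the pixels-per-inch you think a good print needs, which isn't pinned down here, so the 300 and 400 ppi values are assumptions, as are the function names.

```python
def megapixels(width_px, height_px):
    """Pixel count expressed in millions of pixels."""
    return width_px * height_px / 1_000_000

def print_megapixels(width_in, height_in, ppi):
    """Megapixels needed to print at `ppi` pixels per inch with no upscaling."""
    return megapixels(round(width_in * ppi), round(height_in * ppi))

print(f"4K TV (3840 x 2160): {megapixels(3840, 2160):.2f}MP")

# Assumed print densities; pick whichever you consider 'photo quality'.
for ppi in (300, 400):
    print(f'8" x 6" print at {ppi} ppi: {print_megapixels(8, 6, ppi):.2f}MP')
```

At the more demanding 400 ppi the print needs a little under 8MP, and at 300 ppi it needs rather less, so an 8MP file covers both the TV and the print.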

Of course, one possibility with high megapixel images is that you can, as a matter of course, crop the image down and still end up with a photo with pretty high resolution (this is in fact, effectively what 'reframe' does on the PureView Lumias). This is a valid use case, though I'm not sure how many people actually try this - most users will try and frame a photo properly at capture time.

So 8MP is all you need then? Well, not quite.

If I were to pick a sweet spot right now for resolution it would be 10MP for 16:9 images, which is what is thrown out by your average '13MP' sensor in this aspect ratio (think the Lumia 640 XL's rather excellent camera, shown above). 10MP is more than enough for 4K displays, and high enough that there's enough genuine (Nyquist) detail at 5MP and still room for some cropping, while being low enough that pixels never drop below a micron on an average 1/3" sensor. Ideally, I'd like to see 1.4 micron pixels on a 1/2.5" chip.
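Where the '10MP from a 13MP sensor' figure comes from can be sketched as a simple crop calculation. This assumes the sensor is natively 4:3 and that 16:9 output is produced by keeping the full width and discarding rows, which is the usual arrangement but isn't spelled out above. The function name is illustrative.

```python
from fractions import Fraction

def cropped_megapixels(sensor_mp, sensor_aspect, output_aspect):
    """Megapixels left after cropping to a new aspect ratio.

    Aspects are (width, height) tuples. The crop keeps the full sensor
    width or the full height, whichever wastes fewer pixels.
    """
    sensor = Fraction(*sensor_aspect)
    output = Fraction(*output_aspect)
    if output >= sensor:
        kept = sensor / output   # wider output: full width kept, rows lost
    else:
        kept = output / sensor   # narrower output: full height kept, columns lost
    return float(sensor_mp * kept)

# Assumed native 4:3 sensors cropped to 16:9.
for mp in (13, 20):
    print(f"{mp}MP 4:3 sensor -> {cropped_megapixels(mp, (4, 3), (16, 9)):.2f}MP at 16:9")
```

A 4:3 sensor keeps three quarters of its pixels at 16:9, so 13MP becomes a shade under 10MP.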

So the next time manufacturer X launches smartphone Y with a 16MP, 20MP or even 25MP (you know it's going to happen) sensor and outputting at those same resolutions*, just yawn and move on. They're starting to become pixels for the sake of it: you're not gaining much of use in the real world and you're losing out in power and storage.

Postscript: The above sweet spot assumes no oversampling intelligence, of course. The current Lumia 1520, 930 and Icon all use a 20MP sensor, but oversample down in a clever (PureView) way. I'm assuming the rumoured Lumia 950/XL will do the same, though hopefully to 8MP, in 2015. In the Android world, Sony already outputs an 8MP image from their 20MP-sensored Xperia flagships, but in my testing their oversampling didn't seem as effective as in the Nokia units. However, there's nothing stopping other manufacturers from also having a crack at this concept, even if they can't use the 'PureView' registered trademark. After all, every digital camera does a degree of sampling from its sensor grid/mosaic in the Bayer filter process (from a typical RGBG pixel layout to chrominance and luminance values that make sense for the human eye), so why not integrate data from a wider pool of surrounding pixels at each stage, i.e. oversampling?

In principle then, with oversampling on-board, the sweet spot can stay at a 4K-friendly 8MP across all phone cameras but with each manufacturer quoting something along the lines of "8MP HQ output from a 13MP/20MP/25MP sensor" or whatever. Clumsier for the marketeers then, but more efficient and with better image quality for everyone.